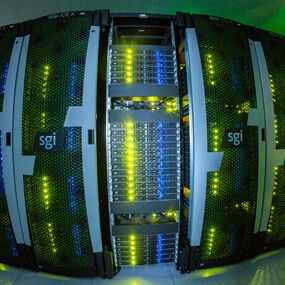

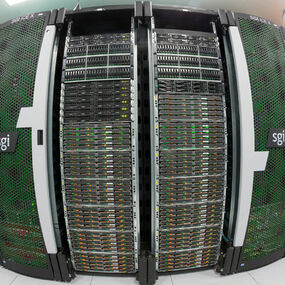

Salomon was a petaflop-class system consisting of 1,008 compute nodes. Each node was equipped with 24 cores (two twelve-core Intel Haswell processors). The nodes were interconnected by InfiniBand FDR and Ethernet networks.

There were two types of compute nodes:

- 576 compute nodes without any accelerator,

- 432 compute nodes with MIC accelerators (two Intel Xeon Phi 7120P per node).
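As a quick consistency check, the node split above can be tallied in a few lines of Python. The per-node figures are taken from the text; the variable names are illustrative only. The totals reproduce the 1,008 nodes, 864 Xeon Phi cards and 24,192 CPU cores quoted elsewhere on this page.

```python
# Node partition of Salomon as described in the list above.
NODES_WITHOUT_ACCEL = 576
NODES_WITH_MIC = 432
MIC_PER_NODE = 2       # two Intel Xeon Phi 7120P per accelerated node
CORES_PER_NODE = 24    # two twelve-core Intel Haswell processors per node

total_nodes = NODES_WITHOUT_ACCEL + NODES_WITH_MIC  # all compute nodes
total_mic = NODES_WITH_MIC * MIC_PER_NODE           # all Xeon Phi cards
total_cores = total_nodes * CORES_PER_NODE          # all CPU cores

print(total_nodes, total_mic, total_cores)  # 1008 864 24192
```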

Each node was equipped with two 2.5 GHz processors and 128 GB of RAM. The theoretical peak performance of the cluster exceeded 2 PFlop/s, and its aggregate LINPACK performance exceeded 1.5 PFlop/s. All compute nodes shared 500 TB of /home disk storage for user files, and a further 1,638 TB of shared storage was available for scratch and project data.
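The peak figure can be roughly reconstructed from the hardware counts. The sketch below is an estimate under assumptions that are not stated on this page: each Haswell core retires 16 double-precision FLOPs per cycle (two AVX2 FMA units), and each Xeon Phi 7120P has 61 cores at a 1.238 GHz base clock, also at 16 DP FLOPs per cycle. The helper functions are hypothetical and the UV compute node is not included. Under these assumptions the two partitions sum to about 2,011.6 TFlop/s, and a LINPACK result of 1.5 PFlop/s would correspond to roughly 74.6 % efficiency.

```python
# ASSUMED per-device figures (not given in the text above):
HASWELL_DP_FLOPS_PER_CYCLE = 16  # 2 FMA units x 4 doubles (AVX2) x 2 ops
PHI_CORES = 61                   # Intel Xeon Phi 7120P core count
PHI_CLOCK_GHZ = 1.238            # Xeon Phi 7120P base clock
PHI_DP_FLOPS_PER_CYCLE = 16      # 512-bit vectors: 8 doubles x 2 ops (FMA)


def cpu_peak_tflops(nodes: int, cores_per_node: int, clock_ghz: float) -> float:
    """Hypothetical helper: theoretical DP peak of the CPU partition in TFlop/s."""
    return nodes * cores_per_node * clock_ghz * HASWELL_DP_FLOPS_PER_CYCLE / 1000


def mic_peak_tflops(cards: int) -> float:
    """Hypothetical helper: theoretical DP peak of all Xeon Phi cards in TFlop/s."""
    return cards * PHI_CORES * PHI_CLOCK_GHZ * PHI_DP_FLOPS_PER_CYCLE / 1000


cpu = cpu_peak_tflops(1008, 24, 2.5)  # 1,008 nodes x 24 cores at 2.5 GHz
mic = mic_peak_tflops(864)            # 432 nodes x 2 cards
total = cpu + mic
# The LINPACK result is only given as "over 1.5 PFlop/s", so this is a lower bound.
linpack_efficiency = 1500 / total

print(f"CPU {cpu:.2f} TFlop/s, MIC {mic:.2f} TFlop/s, total {total:.1f} TFlop/s")
print(f"LINPACK efficiency >= {linpack_efficiency:.1%}")
# CPU 967.68 TFlop/s, MIC 1043.96 TFlop/s, total 2011.6 TFlop/s
# LINPACK efficiency >= 74.6%
```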

Technical information about the Salomon supercomputer

| Parameter | Value |
|---|---|
| Put into operation | Summer 2015 |
| Theoretical peak performance | 2,011 TFlop/s |
| Operating system | CentOS 7.x, 64-bit |
| Compute nodes | 1,008 |
| CPU | 2× Intel Haswell per node, 12 cores, 2.5 GHz (24,192 cores in total) |
| RAM per compute node | 128 GB (3.25 TB on the UV compute node) |
| Accelerators | 864× Intel Xeon Phi 7120P |
| Storage | 500 TB /home (6 GB/s), 1,638 TB /scratch (30 GB/s) |
| Interconnect | InfiniBand FDR, 56 Gb/s |

Learn more at docs.it4i.cz.