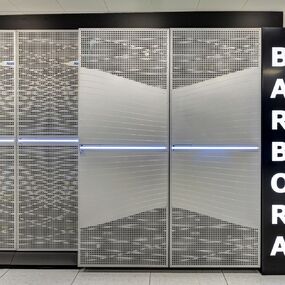

The Barbora supercomputer was installed in autumn 2019 with a theoretical peak performance of 849 TFlop/s.

The computing power consists of:

- 192 standard computational nodes; each node is equipped with two 18-core Intel processors and 192 GB RAM,

- 8 compute nodes with GPU accelerators; each node is equipped with two 12-core Intel processors, four NVIDIA Tesla V100 GPU accelerators with 16 GB of HBM2 and 192 GB of RAM,

- 1 fat node equipped with eight 16-core Intel processors and 6 TB of RAM.
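The node list above can be cross-checked with a little arithmetic. The following is a minimal sketch that tallies nodes, CPU cores, and GPUs from the figures quoted in the list. The `NodeGroup` class, the `GROUPS` list, and the `totals` function are illustrative names introduced here, not part of any IT4I tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One class of nodes, using the figures quoted in the list above."""
    name: str
    nodes: int
    cpus_per_node: int
    cores_per_cpu: int
    gpus_per_node: int = 0


# Hypothetical container; the numbers come straight from the node list.
GROUPS = [
    NodeGroup("standard", nodes=192, cpus_per_node=2, cores_per_cpu=18),
    NodeGroup("gpu", nodes=8, cpus_per_node=2, cores_per_cpu=12, gpus_per_node=4),
    NodeGroup("fat", nodes=1, cpus_per_node=8, cores_per_cpu=16),
]


def totals(groups):
    """Sum nodes, CPU cores, and GPU accelerators over all node groups."""
    return {
        "nodes": sum(g.nodes for g in groups),
        "cpu_cores": sum(g.nodes * g.cpus_per_node * g.cores_per_cpu for g in groups),
        "gpus": sum(g.nodes * g.gpus_per_node for g in groups),
    }


if __name__ == "__main__":
    print(totals(GROUPS))
    # {'nodes': 201, 'cpu_cores': 7232, 'gpus': 32}
```

The result agrees with the 201 compute nodes and 32 accelerators given in the technical information table.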

The supercomputer is built on the Bull Sequana X architecture, and its standard compute nodes use direct liquid cooling. The computing network is built on InfiniBand HDR technology. The SCRATCH storage for computing data has a capacity of 310 TB and a throughput of 28 GB/s with Burst Buffer acceleration. A second storage tier for computing data is NVMe over Fabric, with a total capacity of 22.4 TB dynamically allocated to compute nodes. The system also runs the Bull Super Computer Suite for cluster operation and management, with Slurm as the scheduler and resource manager.

Technical information of the Barbora supercomputer

| Parameter | Value |
|---|---|
| Put into operation | autumn 2019 |
| Theoretical peak performance | 849 TFlop/s |
| Operating system | CentOS 7.x (64-bit) |
| Compute nodes | 201 |
| CPU | 2 x Intel Cascade Lake, 18 cores, 2.6 GHz |
| RAM per compute node | 192 GB (6 TB on the fat node) |
| Accelerators | 32 x NVIDIA Tesla V100 |
| Storage | 29 TB HOME, 310 TB SCRATCH (28 GB/s) |
| Interconnect | InfiniBand HDR 200 Gb/s |

Learn more at docs.it4i.cz.

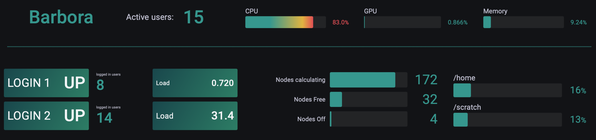

Cluster utilization of the Barbora supercomputer

Barbora dashboard

View current Barbora supercomputer data.

Dashboards display the current state of the supercomputer. The displayed values are:

- utilization

- node allocation

- fill level of the HOME and SCRATCH storage

- statistics of Slurm jobs and the top 7 software modules

- the number of days of operation since the last outage and the date of any planned new outage

- MOTD (Message of the Day)

- the person responsible for the Service of the Day