Anselm was the first supercomputer at IT4Innovations. It had a theoretical peak performance of 94 TFlop/s and was in operation from 2013 to 2021.

During its operation, more than 2.6 million computing jobs from 725 research projects ran on Anselm, in fields such as materials science, computational chemistry, biosciences, and engineering. At the end of January 2021, Anselm was permanently shut down and moved to the World of Civilization exhibition in Dolní Vítkovice (Lower Vítkovice).

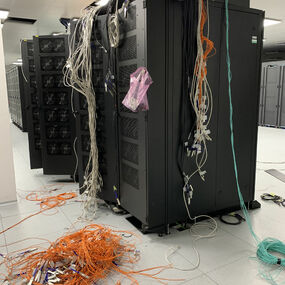

Anselm consisted of 209 computational nodes. Each node was equipped with 16 cores (two eight-core Intel Sandy Bridge processors). These compute nodes were interconnected by InfiniBand (QDR) and Ethernet networks.

There were 4 types of compute nodes:

- 180 compute nodes without any accelerator, with 2.4 GHz CPUs and 64 GB RAM,

- 23 compute nodes with GPU accelerators (NVIDIA Tesla K20), with 2.3 GHz CPUs and 96 GB RAM,

- 4 compute nodes with MIC accelerators (Intel Xeon Phi 5110P), with 2.3 GHz CPUs and 96 GB RAM,

- 2 fat nodes with larger RAM and faster storage (2.4 GHz CPUs, 512 GB RAM and two SSD drives).
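As a quick consistency check, the node mix above can be tallied in a few lines of Python. This is only an illustrative sketch: the `NODE_TYPES` dictionary and the `totals` helper are hypothetical names introduced here, and every count, core number, and RAM size is copied from the list.

```python
# Anselm node types as listed above (hypothetical layout for illustration):
# name -> (node count, cores per node, RAM per node in GB)
NODE_TYPES = {
    "compute (no accelerator)": (180, 16, 64),
    "GPU (NVIDIA Tesla K20)": (23, 16, 96),
    "MIC (Intel Xeon Phi 5110P)": (4, 16, 96),
    "fat": (2, 16, 512),
}


def totals(node_types):
    """Return (total nodes, total cores, total RAM in GB) summed over all node types."""
    nodes = sum(count for count, _, _ in node_types.values())
    cores = sum(count * per_node for count, per_node, _ in node_types.values())
    ram_gb = sum(count * ram for count, _, ram in node_types.values())
    return nodes, cores, ram_gb


nodes, cores, ram_gb = totals(NODE_TYPES)
print(nodes, cores, ram_gb)  # prints "209 3344 15136"
```

The node total agrees with the 209 compute nodes stated earlier, and the core total agrees with the 3,344 cores listed in the specification table.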

The theoretical peak performance of the whole cluster was 94 TFlop/s, and its maximum measured LINPACK performance was 73 TFlop/s.
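The 94 TFlop/s figure can be roughly reconstructed by summing CPU and accelerator peaks node by node. The sketch below rests on assumptions that the text does not state: each Sandy Bridge core is taken to retire 8 double-precision FLOPs per cycle (AVX), one Tesla K20 is taken at its approximate datasheet peak of 1.17 TFlop/s, and one Xeon Phi 5110P at about 1.011 TFlop/s. It also assumes one accelerator per accelerated node, which follows from the counts in the table (23 GPU nodes and 23 K20 cards, 4 MIC nodes and 4 Xeon Phi cards). Treat it as an illustration, not as the operator's official calculation.

```python
# ASSUMPTIONS (not from the original text):
DP_FLOPS_PER_CYCLE = 8          # Sandy Bridge AVX: double-precision FLOPs per core per cycle
K20_PEAK_GFLOPS = 1170.0        # approximate DP peak of one NVIDIA Tesla K20
PHI_5110P_PEAK_GFLOPS = 1011.0  # approximate DP peak of one Intel Xeon Phi 5110P

# (node count, cores per node, CPU clock in GHz, accelerator peak per node in GFlop/s)
NODES = [
    (180, 16, 2.4, 0.0),                  # compute nodes without accelerators
    (23, 16, 2.3, K20_PEAK_GFLOPS),       # GPU nodes, assumed one K20 each
    (4, 16, 2.3, PHI_5110P_PEAK_GFLOPS),  # MIC nodes, assumed one Xeon Phi each
    (2, 16, 2.4, 0.0),                    # fat nodes
]


def peak_tflops(nodes):
    """Sum CPU peak (cores x GHz x FLOPs/cycle) plus accelerator peak over all nodes."""
    gflops = 0.0
    for count, cores, ghz, accel_gflops in nodes:
        gflops += count * (cores * ghz * DP_FLOPS_PER_CYCLE + accel_gflops)
    return gflops / 1000.0  # GFlop/s -> TFlop/s


peak = peak_tflops(NODES)
linpack = 73.0  # maximum LINPACK result quoted in the text, in TFlop/s
print(f"peak ~ {peak:.1f} TFlop/s, LINPACK efficiency ~ {linpack / peak:.0%}")
# prints "peak ~ 94.8 TFlop/s, LINPACK efficiency ~ 77%"
```

Under these assumptions the estimate lands within about one percent of the quoted 94 TFlop/s, and the 73 TFlop/s LINPACK result corresponds to roughly 77% of theoretical peak.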

Technical specifications of the Anselm supercomputer

| Parameter | Value |
|---|---|
| Put into operation | Summer 2013 |
| Termination of operation | January 2021 |
| Theoretical peak performance | 94 TFlop/s |
| Operating system | Red Hat Linux 6.x (64-bit) |
| Compute nodes | 209 |
| CPU | 2x Intel Sandy Bridge per node, 8 cores each, 2.3 / 2.4 GHz; 3,344 cores in total |
| RAM per compute node | 64 GB / 96 GB / 512 GB |
| Accelerators | 4x Intel Xeon Phi 5110P, 23x NVIDIA Tesla K20 (Kepler) |
| Storage | 320 TiB /home (2 GB/s), 146 TiB /scratch (6 GB/s) |
| Interconnect | InfiniBand QDR, 40 Gb/s |

Learn more at docs.it4i.cz.